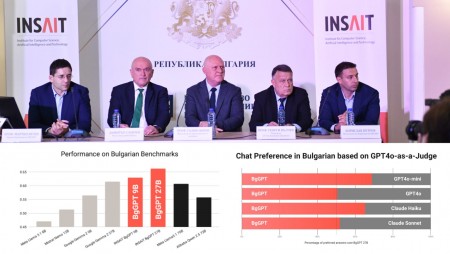

INSAIT (Institute for Computer Science, Artificial Intelligence, and Technology) announces the release of three state-of-the-art AI models targeting the Bulgarian language, with 2.6 billion, 9 billion and 27 billion parameters. These models demonstrate unprecedented performance in Bulgarian, outpacing much larger models such as Qwen-72B and Llama3-70B, as well as similar-sized models, while retaining robust English language capabilities. INSAIT’s 2.6B model significantly outperforms open models of similar size in Bulgarian. All three models are freely available and can be used by businesses and government institutions to build AI-based assistants.

Beyond benchmarks, INSAIT’s 27B model significantly surpasses GPT-4o-mini (free version of GPT-4) and rivals GPT-4o (paid version of GPT-4) in Bulgarian chat performance. The comparison used GPT-4o itself as a judge across thousands of real-world conversations covering around 100 different topics. The results are similar when compared to Anthropic’s Haiku and Sonnet (large) models.

INSAIT’s models are built on top of Google’s Gemma 2 family of models, with various additional improvements. These include continual pre-training on around 100 billion tokens in Bulgarian, and a novel instruction fine-tuning and model merging scheme based on new research presented at EMNLP’24, a top conference in natural language processing. This new Branch-and-Merge scheme ensures that models improve on a target skill, such as Bulgarian understanding and generation, while avoiding catastrophic forgetting of skills already acquired by the base models. The method is widely applicable and its utility is demonstrated beyond Bulgarian.
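
To give a rough intuition for how merging can balance new and existing skills, the following is a minimal Python sketch. It is not INSAIT’s implementation: the announcement does not spell out the algorithm, so the sketch assumes that several branches are trained from the current model on different data slices and then combined by uniform parameter averaging. The names `merge_linear`, `branch_and_merge`, and `train_on` are hypothetical, and the "training" step is a toy stand-in.

```python
from typing import Callable, Dict, List, Sequence

# A toy "model": parameter name -> flat list of weights.
Params = Dict[str, List[float]]


def merge_linear(branches: Sequence[Params]) -> Params:
    """Average parameters element-wise across branches (uniform weights).

    Assumption: plain linear averaging; other merge rules are possible.
    """
    if not branches:
        raise ValueError("need at least one branch")
    merged: Params = {}
    for name in branches[0]:
        columns = zip(*(branch[name] for branch in branches))
        merged[name] = [sum(col) / len(branches) for col in columns]
    return merged


def branch_and_merge(
    base: Params,
    data_slices: Sequence[float],
    train_on: Callable[[Params, float], Params],
    branches_per_round: int = 2,
) -> Params:
    """Repeatedly branch from the current model, train each branch on its
    own data slice, then merge the branches back into a single model."""
    current = base
    for start in range(0, len(data_slices), branches_per_round):
        round_slices = data_slices[start:start + branches_per_round]
        branches = [train_on(current, s) for s in round_slices]
        current = merge_linear(branches)
    return current


def toy_train(params: Params, target: float) -> Params:
    """Hypothetical stand-in for fine-tuning: move weights halfway to target."""
    return {k: [v + 0.5 * (target - v) for v in vals] for k, vals in params.items()}


if __name__ == "__main__":
    base_model = {"w": [0.0, 0.0]}
    print(branch_and_merge(base_model, [1.0, 3.0], toy_train))
```

In this toy setting, each branch drifts toward its own slice, and the merged model lands between them rather than following any single branch, which illustrates why merging can limit how far the model moves away from its starting point.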

“The result shown by INSAIT is significant because it demonstrates that a country can develop its own state-of-the-art AI models by relying on open models, advanced AI research and special data acquisition and training techniques,” said Prof. Martin Vechev, Full Professor at ETH Zurich and scientific director of INSAIT. “While our models target Bulgarian, the methods we developed are general and can be applied to other languages or general acquisition of new skills.”

Building on its 27-billion-parameter model, INSAIT will launch the first public nationwide chat system on November 23rd. The system goes beyond a single model and adds further advances, including alignment, retrieval subsystems and other components. This is the first time globally that a system of this scale has been launched by a government institution.